Ongoing

2023 - Present

Silent Speech Interface Using Earbuds

A silent speech interface using consumer earbuds with ultrasonic sensing to recognize silently spelled words, enabling hands-free and voice-free text input with user authentication.

Earable Computing

Silent Speech

HCI

Overview

Existing wearable silent speech interaction (SSI) systems typically require custom devices and are limited to a small lexicon. This project develops SSI systems using consumer earbuds, enabling discreet text input without hands or audible vocalization. Our research addresses two key challenges: (1) generalizing recognition to words not seen during training and (2) verifying speaker identity alongside speech recognition.

Approach

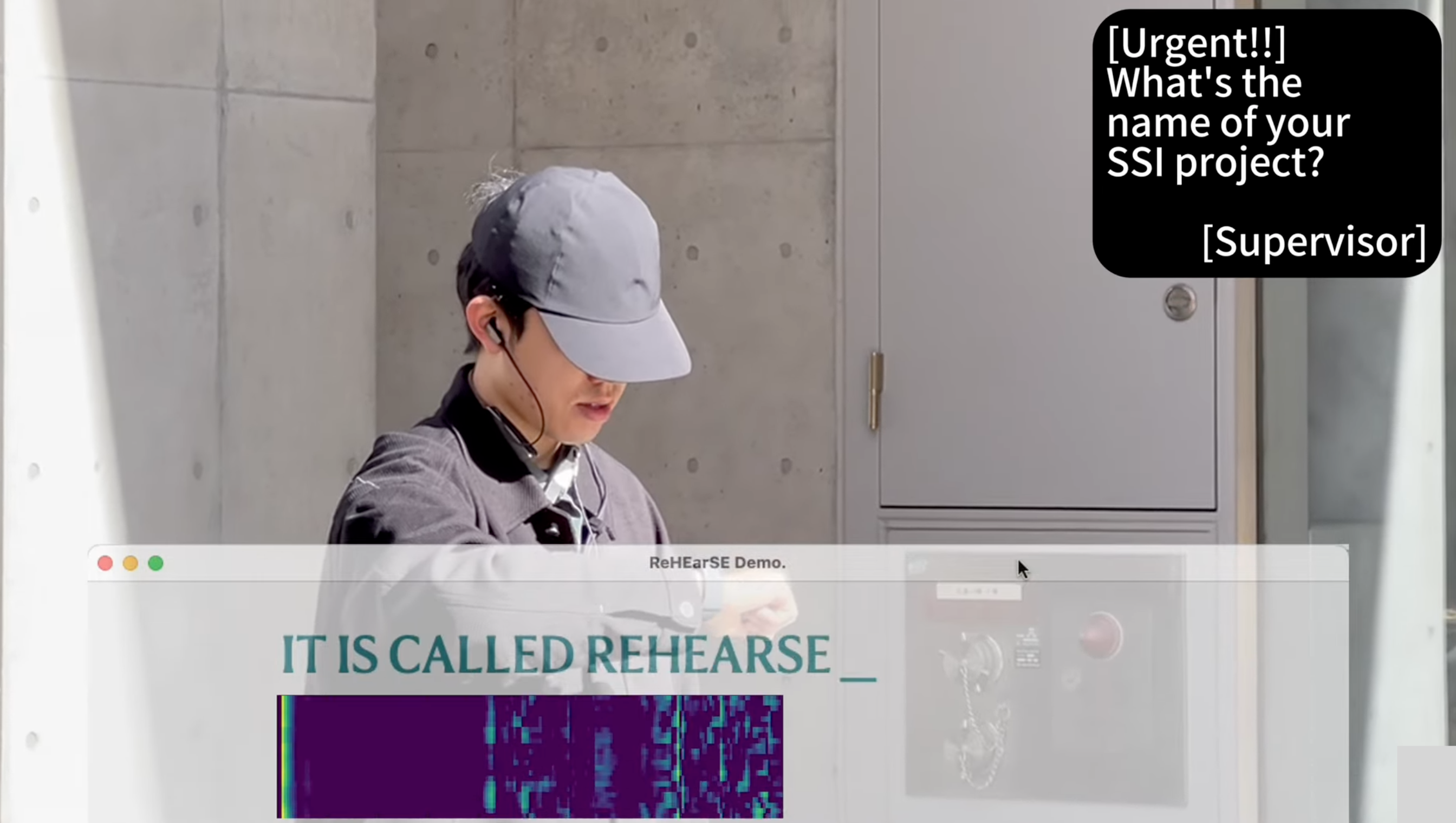

- ReHEarSSE (CHI 2024): An earbud-based ultrasonic SSI system that detects subtle changes in ear canal shape using ultrasonic reflections and autoregressive features as users silently spell words. A deep learning model trained with connectionist temporal classification (CTC) loss and an intermediate embedding for letters and transitions enables generalization to unseen words.

- HEar-ID (UbiComp 2025, Best Poster Award): Extends ReHEarSSE by jointly performing user authentication and silent speech recognition using a single model on consumer active noise-canceling earbuds. Low-frequency whisper audio and high-frequency ultrasonic reflections pass through a shared encoder, with a contrastive learning branch for authentication and an SSI head for spelling recognition.
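
Because a CTC-trained model emits letter-level predictions per frame rather than whole-word classes, any spelled word can in principle be read out of its output, which is what makes recognition of unseen words possible. The sketch below shows standard greedy CTC decoding (merge repeated labels, then drop blanks) on toy per-frame scores. It is an illustration only, not the ReHEarSSE decoder: the blank index, the 26-letter label set, and the `one_hot` helper are assumptions made for this example.

```python
from typing import List, Sequence

BLANK = 0  # assumed index of the CTC blank symbol
ALPHABET = "abcdefghijklmnopqrstuvwxyz"  # assumed labels: index i + 1 maps to letter i


def greedy_ctc_decode(frame_scores: Sequence[Sequence[float]]) -> str:
    """Take the best label per frame, merge consecutive repeats, drop blanks."""
    best_per_frame: List[int] = [
        max(range(len(scores)), key=scores.__getitem__) for scores in frame_scores
    ]
    letters: List[str] = []
    previous = BLANK
    for label in best_per_frame:
        # Emit a letter only when the label changes and is not the blank.
        if label != previous and label != BLANK:
            letters.append(ALPHABET[label - 1])
        previous = label
    return "".join(letters)


def one_hot(label: int, size: int = 27) -> List[float]:
    """Hypothetical helper: a toy score row that puts all mass on one label."""
    row = [0.0] * size
    row[label] = 1.0
    return row


# Toy frame sequence: c c <blank> a <blank> b b
frames = [one_hot(i) for i in [3, 3, 0, 1, 0, 2, 2]]
decoded = greedy_ctc_decode(frames)
print(decoded)
```

Note that a blank between two identical labels keeps them separate, so double letters such as "ll" survive decoding as long as the model emits a blank between them.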

Results

- ReHEarSSE: Achieved 89.3% recognition accuracy on words not in the training set, which lets it support nearly an entire dictionary's worth of vocabulary.

- HEar-ID: Decodes 50 words while reliably rejecting impostors, demonstrating strong spelling accuracy and robust authentication on commodity earbuds.
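
To make "rejecting impostors" concrete, here is a minimal sketch of how embeddings from a contrastively trained branch are commonly used for verification: average a few enrollment embeddings into a template, then accept a probe only if its cosine similarity to the template clears a threshold. The decision rule actually used by HEar-ID is not described here, so the functions `enroll` and `verify`, the 0.8 threshold, and the three-dimensional toy vectors are all assumptions for illustration.

```python
import math
from typing import List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors; 0.0 if either has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def enroll(embeddings: Sequence[Sequence[float]]) -> List[float]:
    """Hypothetical enrollment: average the user's embeddings into one template."""
    count = len(embeddings)
    return [sum(column) / count for column in zip(*embeddings)]


def verify(probe: Sequence[float], template: Sequence[float],
           threshold: float = 0.8) -> bool:
    """Accept the probe only if it is similar enough to the enrolled template."""
    return cosine_similarity(probe, template) >= threshold


# Toy embeddings; real ones would come from the shared encoder.
template = enroll([[1.0, 0.0, 0.0], [0.9, 0.1, 0.0]])
genuine_ok = verify([0.92, 0.08, 0.0], template)
impostor_ok = verify([0.0, 1.0, 0.2], template)
print(genuine_ok, impostor_ok)
```

In practice the threshold trades off false accepts against false rejects and would be tuned on held-out data rather than fixed in advance.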

Significance

This research demonstrates that consumer earbuds can serve as a practical platform for hands-free, voice-free text input. By overcoming the vocabulary limitation of existing SSI systems and integrating user authentication, this work paves the way for secure and scalable silent speech interfaces in everyday settings.